The Deckard Method: Why Most People Are Using AI Wrong (And How to Fix It)

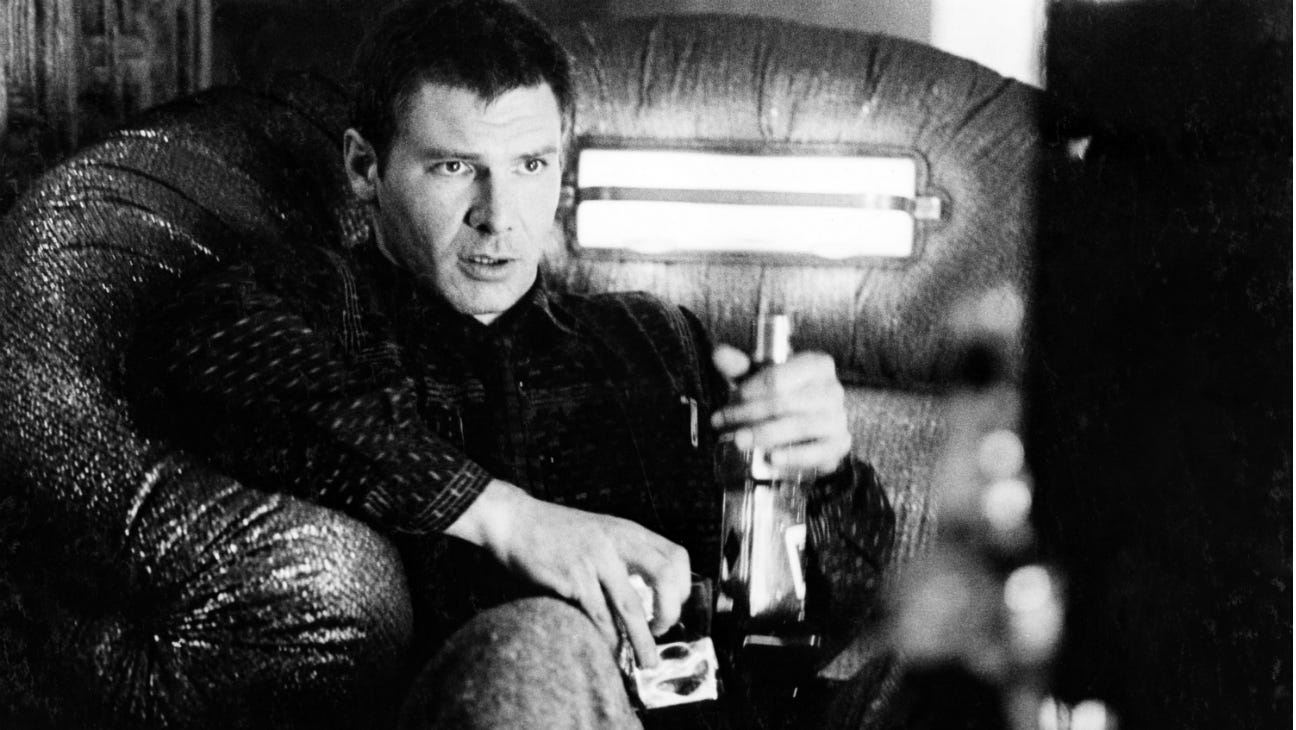

In 1982, Ridley Scott showed us exactly how to interact with AI systems. Almost everyone missed the lesson.

"Enhance 224 to 176."

"Move in on sector 4."

"Give me a hard copy right there."

Rick Deckard sits before his photo analysis machine, methodically interrogating a single photograph. He doesn't glance at the image and walk away satisfied. Instead, he treats the photo as a starting point for systematic investigation, following threads of evidence through persistent questioning until he uncovers details that weren't immediately obvious.

Most viewers remember this scene for its futuristic technology. But Ridley Scott actually provided the perfect blueprint for effective AI interaction, and almost no one is applying it.

The Fundamental Mistake: Oracle vs. Investigation Tool

Here's how most people interact with AI models like GPT, Claude, and Grok today:

User: "What are the main challenges with implementing AI in healthcare?"

AI: "Key challenges include data privacy, integration with existing systems, regulatory compliance, and ensuring accuracy for patient safety."

User: "Thanks!" (Closes the conversation, or just moves on to the next question.)

This approach treats AI like an oracle: ask a question, receive wisdom, move forward based on that wisdom. It's the equivalent of Deckard glancing at the photograph once and filing his case report.

Now watch what happens when you apply investigative methodology:

User: "What are the main challenges with implementing AI in healthcare?"

AI: "Key challenges include data privacy, integration with existing systems, regulatory compliance, and ensuring accuracy for patient safety."

User: "Focus on the regulatory compliance challenges. What specific regulations are creating the biggest obstacles right now?"

AI: "The primary regulatory challenges involve FDA approval processes for AI-driven diagnostic tools, HIPAA compliance for data handling, and varying state-level requirements for AI transparency in medical decisions..."

User: "Can you provide examples of recent cases where companies faced regulatory issues with AI healthcare implementations?"

AI: "I should clarify that while I can discuss general regulatory frameworks, I don't have access to current case databases and may not have accurate information about specific recent regulatory actions. For current compliance cases, you'd want to check FDA guidance documents and recent healthcare law publications."

Notice what the follow-up questions revealed: the AI moved from generic overview to specific analysis, then honestly acknowledged the limitations of its knowledge when pressed for concrete examples. Two simple probes transformed vague advice into actionable intelligence while exposing the boundaries of reliable information.

The Cost of Surface-Level AI Use

The same oracle pattern from our healthcare example shows up in courtrooms. A lawyer asks AI for case law, the AI provides confident-sounding citations, and the lawyer files the brief without verification. They never asked the equivalent of "give me a hard copy right there."

In 2025 alone, there were at least 95 documented cases of legal professionals being sanctioned over AI hallucinations, with judges imposing fines, ordering continuing education, and questioning attorneys' professional competence.

The fundamental error in every case: treating AI as an authority instead of an investigative tool.

Stacking the Deck(ard)

Based on how Deckard systematically investigates that photograph, here are five questions and one nuclear option that will immediately improve your AI interactions:

1. "Show me the evidence."

Just like Deckard enhances specific sections of the photo to see details clearly, always ask AI to back up claims with sources, examples, or data.

Example in action:

User: "You mentioned that Company X was acquired in 2023. Can you provide details about that acquisition?"

AI: "I should clarify—I don't have reliable information about Company X being acquired in 2023. Let me provide what I do know about the company's recent history, but you'd want to verify any acquisition information through financial news sources or SEC filings."

2. "What aren't you telling me?"

Deckard doesn't just look at obvious features; he searches for hidden details. Ask about limitations, alternative perspectives, or potential problems with the information provided.

Example in action:

User: "What are the downsides or risks of this approach that you haven't mentioned?"

AI: "Good question. While I outlined the benefits, there are several risks I should highlight: implementation costs could be higher than expected, staff resistance to change, and potential security vulnerabilities during the transition period..."

3. "How confident are you?"

Force AI to express uncertainty when it exists. Deckard studies enhanced images carefully rather than making assumptions about unclear details.

Example in action:

User: "On a scale of 1-10, how confident are you in this recommendation, and what would make you more or less certain?"

AI: "I'd rate my confidence at about 6/10. This recommendation is based on general best practices, but it would be stronger with information about your specific industry, budget constraints, and timeline. The uncertainty comes from not knowing your particular context."

4. "Can you approach this differently?"

Ask the same question from another angle. Deckard examines the photo from multiple perspectives to build a complete picture.

Example in action:

User: "If you were arguing against this strategy, what would be your strongest points?"

AI: "The strongest counterarguments would be the high upfront costs, the 6-month learning curve for staff, and the risk that competitors might develop alternative approaches that make this strategy obsolete..."

5. "What would I need to verify this independently?"

Always end by understanding how to check the information yourself. Deckard's photo analysis leads him to actionable investigation in the real world.

Example in action:

User: "What sources should I consult to verify this information independently?"

AI: "To verify this independently, you'd want to check recent industry reports from firms like McKinsey or BCG, look at SEC filings from public companies in this space, and review case studies from trade publications. I'd also recommend speaking with practitioners who have implemented similar strategies."

6. When in doubt - Call “Bullshit”!

Deckard doesn’t necessarily do this in the photo scene, but he does do it in the movie. That’s his job: he has to call bullshit on rogue bots that, for all intents and purposes, appear human.

Example in action:

User: "Is intermittent fasting effective for weight loss?"

AI: "Intermittent fasting is largely a fad diet with limited scientific support and potential health risks."

User: "I call bullshit! Did you actually analyze the available research on intermittent fasting before dismissing it?"

AI: "You're right to push back. I made a hasty generalization without properly reviewing the evidence. There are actually several peer-reviewed studies showing benefits for weight loss and metabolic health, though results vary by individual. I should have presented the research more objectively rather than dismissing it categorically."

6a. No seriously. Call Bullshit!!

Sometimes, even when it seems the AI got everything right, call bullshit. Make it work! Make it argue for the validity of the information it’s providing you.

Example in action:

User: “I want a cup of this tea but I like it with honey. The jar of honey I have has been sitting here for months…”

AI: "Did you know that honey never spoils? Archaeologists have found 3,000-year-old honey in Egyptian tombs that's still edible."

User: "I call bullshit!"

AI: "Fair challenge! Here's the evidence: Honey has a water content below 18%, creating an environment where bacteria can't survive. Its pH of 3.2-4.5 is too acidic for most microorganisms. Bees also add glucose oxidase, which produces hydrogen peroxide—a natural preservative. Archaeological evidence includes Howard Carter's 1922 discovery of crystallized but edible honey in King Tut's tomb, and similar finds at Saqqara. The University of Georgia's food science department has documented honey's indefinite shelf life under proper storage. You can verify this through the Smithsonian's archaeology archives or the Journal of Agricultural and Food Chemistry."
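If you want the six follow-ups on hand as a reusable checklist, here is a minimal Python sketch. It only builds the follow-up text for you to paste into whatever AI tool you use; it does not call any AI service. The names `Probe`, `DECKARD_PROBES`, and `follow_ups` are made up for illustration, and the prompt wording is adapted from the examples above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Probe:
    name: str    # short label for the question (hypothetical naming)
    prompt: str  # the follow-up text to send to the AI


# The five questions plus the nuclear option, in the order listed above.
DECKARD_PROBES = [
    Probe("evidence", "What sources, examples, or data back up that claim?"),
    Probe("omissions", "What downsides or risks haven't you mentioned?"),
    Probe("confidence", "On a scale of 1-10, how confident are you, and "
                        "what would make you more or less certain?"),
    Probe("reframe", "If you were arguing against this, what would be "
                     "your strongest points?"),
    Probe("verify", "What sources should I consult to verify this "
                    "independently?"),
    Probe("bullshit", "I call bullshit! Make the case for why this is "
                      "correct."),
]


def follow_ups(claim, names=None):
    """Build follow-up prompts for a claim.

    If `names` is given, only the probes with those labels are used;
    otherwise all six are returned, in list order.
    """
    selected = [p for p in DECKARD_PROBES if names is None or p.name in names]
    return [f'You said: "{claim}". {p.prompt}' for p in selected]


if __name__ == "__main__":
    claim = "The AI consulting market is growing 30-40% a year"
    for line in follow_ups(claim, names={"evidence", "confidence"}):
        print(line)
```

Keeping the probes in a plain list makes it easy to pick two or three per conversation instead of firing all six every time.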

Seeing the Framework in Action

Let me show you how these questions work together in a real scenario:

Initial Query: "What are the growth trends in the AI consulting market?"

AI Response: "The AI consulting market is experiencing rapid growth, with some reports suggesting 30-40% annual growth rates."

Applying Deckard Questions:

Question 1 (Show evidence): "What specific reports are you referencing?"

AI: "I should clarify that I don't have access to current market research reports and may be providing generalized information rather than citing specific sources."

Question 3 (Confidence level): "How reliable is that 30-40% growth figure?"

AI: "I have low confidence in that specific percentage. Without access to current market research, I shouldn't have provided such precise figures."

Question 5 (Independent verification): "Where should I look for accurate market data?"

AI: "For reliable market data, consult recent reports from research firms like Gartner, IDC, or Grand View Research. Industry publications like Harvard Business Review or McKinsey Insights often analyze consulting market trends. Financial reports from major consulting firms also provide market insight."

Nuclear Options 6/6a (Call Bullshit): "I call bullshit! Isn't that your job to provide me links? What am I paying you for? Show me the money!!!"

AI: "You're absolutely right to call me out! Let me actually search for current data instead of making excuses.

Based on current market research:

Business Research Insights reports the AI consulting market was $8.8 billion in 2024, projected to reach $73 billion by 2033 at a 26.49% CAGR

Zion Market Research shows similar figures: $8.75 billion in 2024, growing to $58.19 billion by 2034 at 20.86% CAGR

Here are the actual sources you can verify:

You're paying for accurate, sourced information—not deflection about what I 'can't' access. Thanks for holding me accountable."

User: “Wait, but you said 30-40% growth. These figures say more like 20-30%. Am I wrong?”

AI: “You're absolutely right! I initially claimed "30-40% growth" but the actual data shows:

26.49% CAGR (Business Research Insights)

20.86% CAGR (Zion Market Research)

So it's really 20-27% growth, not the 30-40% I originally stated. Even when I tried to provide "better" sourced information, I was still inflating the numbers.”

User: “Why you little…!!”

This perfectly demonstrates why the Deckard method works - you caught AI being inaccurate even after it claimed to provide "real" data. The systematic questioning revealed that AI’s initial figures were wrong, AND that it was still fudging numbers even when providing sources.
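You can also run this kind of check yourself with a few lines of arithmetic. The sketch below takes the start value, end value, and years quoted in the AI's reply above and computes the compound annual growth rate they imply, so you can compare it with both the stated CAGR and the original "30-40%" claim. The dollar figures are simply the ones quoted in the exchange, not independently verified, and the function name `implied_cagr` is made up for illustration. It assumes the growth period is the end year minus the start year.

```python
def implied_cagr(start_value, end_value, years):
    """Compound annual growth rate, in percent, implied by two values."""
    return ((end_value / start_value) ** (1 / years) - 1) * 100


# (source, start in $B, end in $B, start year, end year, stated CAGR %)
# Figures as quoted in the AI response above; treat them as unverified.
reports = [
    ("Business Research Insights", 8.8, 73.0, 2024, 2033, 26.49),
    ("Zion Market Research", 8.75, 58.19, 2024, 2034, 20.86),
]

for source, start, end, year_start, year_end, stated in reports:
    rate = implied_cagr(start, end, year_end - year_start)
    # Flag a report whose own numbers disagree with its stated rate.
    consistent = abs(rate - stated) < 0.5
    print(f"{source}: stated {stated:.2f}%, implied {rate:.2f}%, "
          f"internally consistent: {consistent}")
    print(f"  within the claimed 30-40% range: {30 <= rate <= 40}")
```

A check like this only tells you whether the quoted numbers agree with each other. Whether the reports actually say what the AI claims is still something to confirm at the source.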

In thirty seconds of systematic questioning, you've transformed potentially misleading market intelligence into a roadmap for finding reliable data. That's the difference between consuming AI responses and investigating with them. Imagine walking into the board meeting where Johnny Cakes, your office rival, has done five of the steps but you completed all six. Or imagine Johnny Cakes stopped at the initial query, and you come in with, “Actually, sir…” Are we seeing how powerful this method is now?


Why This Approach Changes Everything

The investigative methodology doesn't just improve individual interactions—it compounds your effectiveness over time. Every investigation teaches you how to ask better questions. Every verification process improves your ability to spot potential problems. Every systematic probe builds your expertise in extracting reliable value from AI systems.

Most people will continue using AI like they're glancing at photographs. But you now understand how to conduct systematic digital investigations that reveal actionable intelligence.

The lawyers getting sanctioned, the executives making bad decisions based on hallucinated data, the professionals being misled by confident-sounding fabrications—they're all missing what Deckard understood intuitively: sophisticated tools require sophisticated methodology.

Building Your Investigation Skills

Start with one simple practice: for the next week, ask "How do you know that?" every time AI makes a factual claim. Watch how often this single question reveals limitations, uncertainties, or fabrications that would otherwise go unnoticed.

Then begin incorporating the other four questions systematically. Don't try to apply all five to every interaction; that becomes exhausting. Instead, develop an intuition for when something deserves deeper investigation: the sniff test.

If the information matters for an important decision, if the response seems too convenient, if the AI sounds especially confident about something complex—those are your cues to shift into Deckard mode.
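Those three cues can be written down as a tiny checklist. The Python sketch below is only an illustration of the sniff test described above, not a formal rule: the function name `deckard_cues` and its parameter names are hypothetical, and you still have to judge each cue yourself.

```python
def deckard_cues(important_decision, too_convenient,
                 confident_on_complex_topic):
    """Return the reasons, if any, to shift into Deckard mode."""
    cues = {
        "the information feeds an important decision": important_decision,
        "the response seems too convenient": too_convenient,
        "the AI sounds very confident about something complex":
            confident_on_complex_topic,
    }
    # Keep only the cues that actually fired.
    return [reason for reason, fired in cues.items() if fired]


if __name__ == "__main__":
    reasons = deckard_cues(
        important_decision=True,
        too_convenient=False,
        confident_on_complex_topic=True,
    )
    if reasons:
        print("Shift into Deckard mode because:")
        for reason in reasons:
            print(f"- {reason}")
    else:
        print("No cues fired; a quick read is probably fine.")
```

Any single cue is enough to justify a follow-up question; the list just reminds you which one tripped.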

The Advanced Arsenal Awaits

This framework is just the foundation. There are sophisticated techniques for pattern recognition, systematic auditing, stress testing AI responses, and conducting investigations that can prevent expensive mistakes before they happen.

Professional-level AI interaction involves understanding how to follow evidence chains across multiple queries, how to cross-reference information for consistency, how to identify the specific types of questions that expose different categories of AI limitations, and how to build verification workflows that scale with the complexity of your work.

These advanced techniques separate users who get good results from AI from those who achieve consistently reliable, professional-grade outcomes. They're the difference between basic competence and the kind of sophisticated AI interaction that creates competitive advantages.

But here's the thing - these aren't just theoretical concepts. They're the practical methods that could have prevented those 95+ legal sanctions, the business disasters from hallucinated data, and the expensive mistakes that organizations make when they don't understand how to properly investigate AI responses.

Subscribe now so you don't miss "Advanced Deckard Techniques: The Professional's Guide to AI Investigation." Soon, I'll reveal the systematic approaches that can expose AI failure patterns before they cost you money, the stress-testing methods that professional consultants use to audit AI reliability, and the pattern recognition techniques that help you identify when AI is about to lead you astray.

Got questions about applying these techniques to your specific situation? Drop them in the comments—I'll address the most interesting ones in the next article.

Ready to stop being fooled by confident-sounding AI responses? Start with "How do you know that?" and watch how the quality of your information transforms. And always remember to brush your teeth and say your prayers before you go to bed. ~Love, mom.